𝐌𝐞𝐬𝐬𝐚𝐠𝐞 𝐐𝐮𝐞𝐮𝐞𝐢𝐧𝐠 𝐏𝐚𝐭𝐭𝐞𝐫𝐧𝐬

Message queues enable asynchronous, decoupled communication between services and help address architectural concerns such as durability, reliability and availability.

There are many ways in which message queues can be utilised within an architecture. Below are a few patterns, each suited to a different problem scenario and each with its own trade-offs:

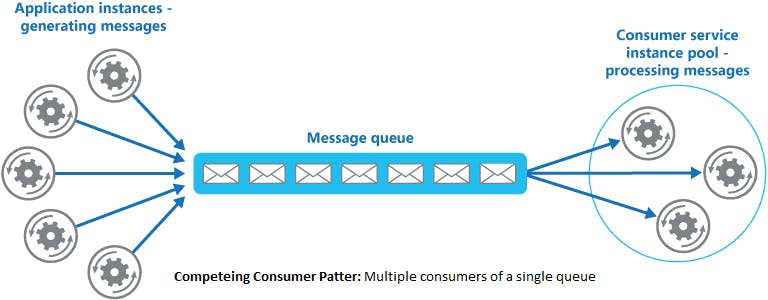

𝐓𝐡𝐞 𝐜𝐨𝐦𝐩𝐞𝐭𝐢𝐧𝐠 𝐜𝐨𝐧𝐬𝐮𝐦𝐞𝐫 𝐩𝐚𝐭𝐭𝐞𝐫𝐧: A single consumer de-queueing messages one at a time becomes a bottleneck during a sudden spike in traffic, as messages pile up on the queue faster than they are cleared and queuing times increase. This creates a need for scalability.

Scalability in such cases is accomplished by deploying multiple consumers that compete to read messages off the same queue and process them concurrently.
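A minimal in-process sketch of competing consumers, assuming Python's standard-library `queue.Queue` and threads as stand-ins for a real broker and separately deployed consumer instances. The `process` function and the sentinel-based shutdown are illustrative assumptions, not part of any messaging product's API.

```python
import queue
import threading


def process(message: int) -> int:
    """Hypothetical stand-in for real message handling."""
    return message * 2


def consumer(name: str, q: queue.Queue, results: list, lock: threading.Lock) -> None:
    # Every consumer competes for the next available message on the same queue.
    while True:
        message = q.get()        # blocks until a message is available
        if message is None:      # sentinel (an assumption of this sketch): stop consuming
            break
        outcome = process(message)
        with lock:               # protect the shared results list
            results.append((name, message, outcome))


def run_competing_consumers(messages, num_consumers: int = 3) -> list:
    q: queue.Queue = queue.Queue()
    results: list = []
    lock = threading.Lock()
    workers = [
        threading.Thread(target=consumer, args=(f"consumer-{i}", q, results, lock))
        for i in range(num_consumers)
    ]
    for w in workers:
        w.start()
    for m in messages:           # producer side: enqueue all messages
        q.put(m)
    for _ in workers:            # one sentinel per consumer so each one exits
        q.put(None)
    for w in workers:
        w.join()
    return results


if __name__ == "__main__":
    for name, message, outcome in run_competing_consumers(range(10)):
        print(name, message, outcome)
```

Which consumer handles which message, and the order in which results appear, can vary from run to run. That non-determinism is why the pattern requires messages to be independent of one another.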

This pattern is only applicable when messages can be processed independently of one another: because several consumers process messages concurrently, there is no guarantee that messages are processed in the order in which they were enqueued.

This strategy does not guarantee unlimited scalability. A single queue can hold only a limited number of messages, and if producers publish faster than all consumers combined can clear messages, the queue can still approach its limit.
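The arithmetic behind that limit can be sketched as follows, assuming constant rates (a simplification): with producers publishing λ messages per second and n consumers each processing μ messages per second, the backlog grows at λ - nμ messages per second and, starting from a backlog b, reaches a capacity C after (C - b) / (λ - nμ) seconds. The function name and the figures in the example are hypothetical.

```python
from typing import Optional


def seconds_until_queue_full(
    arrival_rate: float,       # messages/second published by producers (assumed constant)
    per_consumer_rate: float,  # messages/second one consumer can process (assumed constant)
    consumers: int,            # number of competing consumers
    capacity: int,             # maximum number of messages the queue can hold
    backlog: int = 0,          # messages already waiting on the queue
) -> Optional[float]:
    """Return seconds until the queue reaches capacity, or None if it never does."""
    drain_rate = consumers * per_consumer_rate
    growth = arrival_rate - drain_rate
    if growth <= 0:
        return None  # consumers keep up, so the backlog never grows
    return max(capacity - backlog, 0) / growth
```

For example, with 1,000 messages/second arriving, consumers that each handle 100 messages/second and a capacity of 100,000 messages, eight consumers let an empty queue fill in 500 seconds (`seconds_until_queue_full(1000, 100, 8, 100_000)` returns `500.0`), whereas ten or more consumers keep up and the function returns `None`.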

𝐐𝐮𝐞𝐮𝐞-𝐛𝐚𝐬𝐞𝐝 𝐥𝐨𝐚𝐝 𝐥𝐞𝐯𝐞𝐥𝐥𝐢𝐧𝐠 𝐩𝐚𝐭𝐭𝐞𝐫𝐧: When scaling out is not an option, other ways of handling load spikes or bottlenecks are needed. For example, a system may depend on a third-party service that cannot handle the load placed on it.

This can be mitigated by communicating with such a service asynchronously via a message queue. The queue absorbs spikes in load, while logic on the consuming side controls the rate at which messages are passed on, so the targeted service receives a steady, consistent flow.
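A minimal sketch of that rate-controlling logic, assuming an in-memory `queue.Queue` and a hypothetical `send` callable standing in for the call to the third-party service. It simply spaces sends by a fixed interval derived from `max_per_second` (an assumed tuning parameter); it is not any particular library's throttling mechanism.

```python
import queue
import time
from typing import Callable


def drain_at_steady_rate(
    q: queue.Queue,
    send: Callable[[object], None],  # hypothetical call to the downstream service
    max_per_second: float,           # assumed rate the downstream service can tolerate
) -> int:
    """Forward queued messages to `send`, never faster than max_per_second."""
    interval = 1.0 / max_per_second
    next_slot = time.monotonic()     # earliest time the next send is allowed
    sent = 0
    while True:
        try:
            message = q.get_nowait()
        except queue.Empty:
            return sent  # drained; a long-running worker would block on q.get() instead
        now = time.monotonic()
        if now < next_slot:
            time.sleep(next_slot - now)  # wait for the next permitted send slot
        send(message)
        sent += 1
        # Schedule the following slot one interval after this send's slot.
        next_slot = max(next_slot, now) + interval
```

However bursty the producers are, the downstream service sees at most roughly `max_per_second` calls per second; the excess waits on the queue rather than overwhelming the service.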

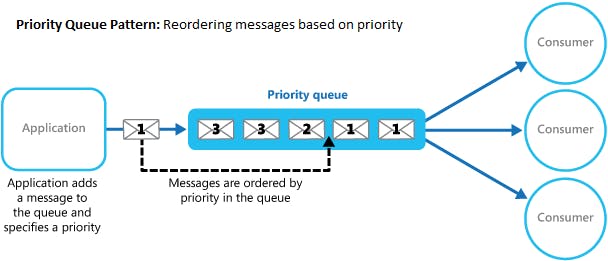

𝐓𝐡𝐞 𝐩𝐫𝐢𝐨𝐫𝐢𝐭𝐲 𝐪𝐮𝐞𝐮𝐞 𝐩𝐚𝐭𝐭𝐞𝐫𝐧: Messages are usually de-queued and processed in first-in/first-out (FIFO) order. However, some messages may be of a higher priority than others: a newly enqueued message may be more urgent than messages already on the queue, and delaying its processing until those earlier messages are cleared may break timing requirements.

This can be handled either by re-ordering messages within the queue or by dedicating a separate queue to each priority level.
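The re-ordering approach can be sketched with Python's `heapq` binary heap. This is a minimal in-memory illustration, not a broker feature: the class name is hypothetical, lower numbers are assumed to mean higher priority, and a sequence counter keeps FIFO order among messages of equal priority.

```python
import heapq
import itertools
from typing import Any, List, Tuple


class PriorityMessageQueue:
    """Hypothetical queue that re-orders messages on enqueue by priority."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, Any]] = []
        self._seq = itertools.count()  # tie-breaker: preserves FIFO within a priority

    def enqueue(self, message: Any, priority: int) -> None:
        # Lower number = higher priority (an assumption of this sketch).
        heapq.heappush(self._heap, (priority, next(self._seq), message))

    def dequeue(self) -> Any:
        _, _, message = heapq.heappop(self._heap)
        return message

    def __len__(self) -> int:
        return len(self._heap)
```

Usage: after `enqueue("report", 5)`, `enqueue("email", 5)` and `enqueue("payment", 1)`, three calls to `dequeue()` return `"payment"`, `"report"`, `"email"`. Each `heappush` costs O(log n), a small example of the extra work done at enqueue time.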

Potential downsides of this pattern include message starvation, where a constant flow of high-priority messages prevents lower-priority messages from ever being processed.
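The dedicated-queue approach, polled strictly in priority order, makes this starvation risk easy to reproduce. In this sketch, in-memory deques stand in for separate broker queues, and the priority names and the `simulate` helper are hypothetical; the consumer is assumed to handle one message per tick.

```python
from collections import deque
from typing import Deque, Dict, List, Optional

PRIORITIES = ("high", "low")  # hypothetical priority levels, highest first


def next_message(queues: Dict[str, Deque[str]]) -> Optional[str]:
    # Strict priority: a lower-priority queue is read only when all higher ones are empty.
    for p in PRIORITIES:
        if queues[p]:
            return queues[p].popleft()
    return None


def simulate(ticks: int, high_per_tick: int) -> List[str]:
    queues: Dict[str, Deque[str]] = {p: deque() for p in PRIORITIES}
    queues["low"].append("low-0")  # one low-priority message waiting from the start
    processed: List[str] = []
    for t in range(ticks):
        for i in range(high_per_tick):
            queues["high"].append(f"high-{t}-{i}")
        msg = next_message(queues)  # consumer handles one message per tick
        if msg is not None:
            processed.append(msg)
    return processed
```

With one high-priority arrival per tick, `simulate(100, 1)` processes 100 high-priority messages and never reaches `"low-0"`; with no high-priority traffic, `simulate(3, 0)` returns `["low-0"]`.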

The prioritisation process and/or re-ordering of messages can in itself increase latency as additional processing is required prior to enqueuing messages.

Cloud providers also offer managed messaging services. Azure, for example, offers Azure Service Bus and Azure Queue Storage, which differ in factors such as the maximum message size each supports and how long messages are retained.

Open-source messaging systems are also available; two popular options are RabbitMQ and Apache Kafka, each with its own trade-offs. Windows also includes its own message queueing system, Microsoft Message Queuing (MSMQ).